Summary

A Deterministic, IDE‑Native Way to Author Terraform/OpenTofu Modules

Authoring Infrastructure‑as‑Code (IaC) modules in Terraform or OpenTofu often demands deep mastery of variable namespaces, naming conventions, configuration patterns, security best practices, and collaboration workflows. That complexity slows onboarding, encourages duplication, and can hide subtle errors until staging or production.

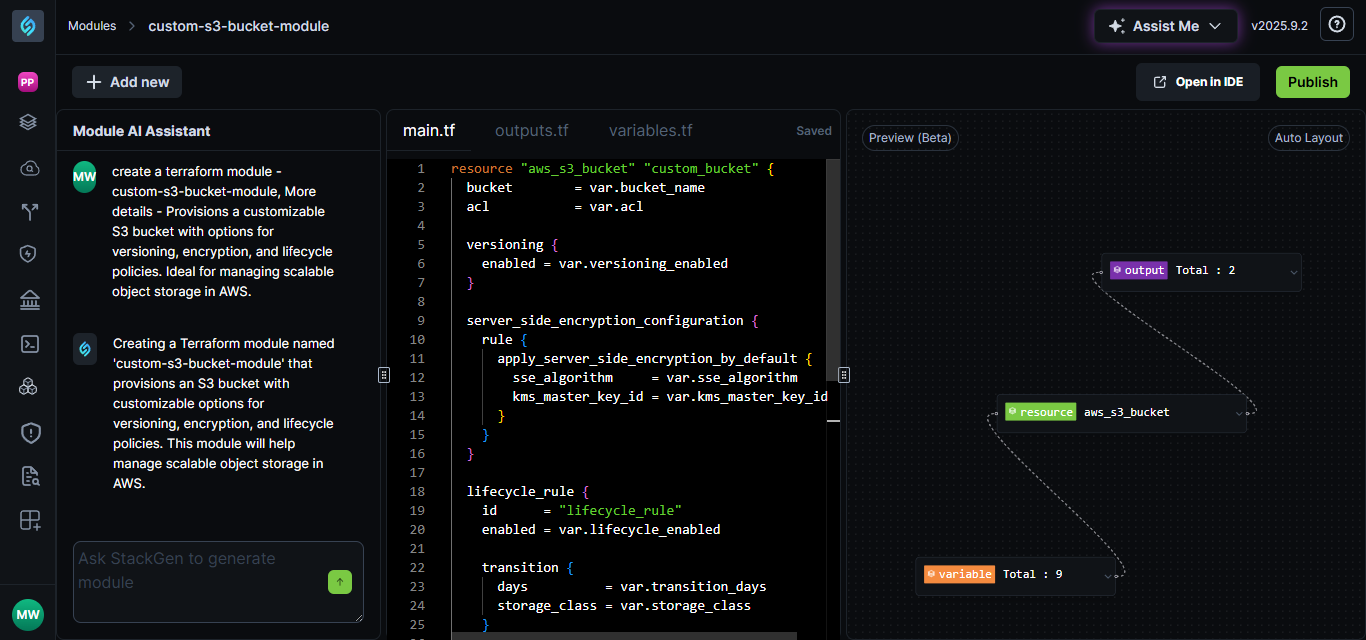

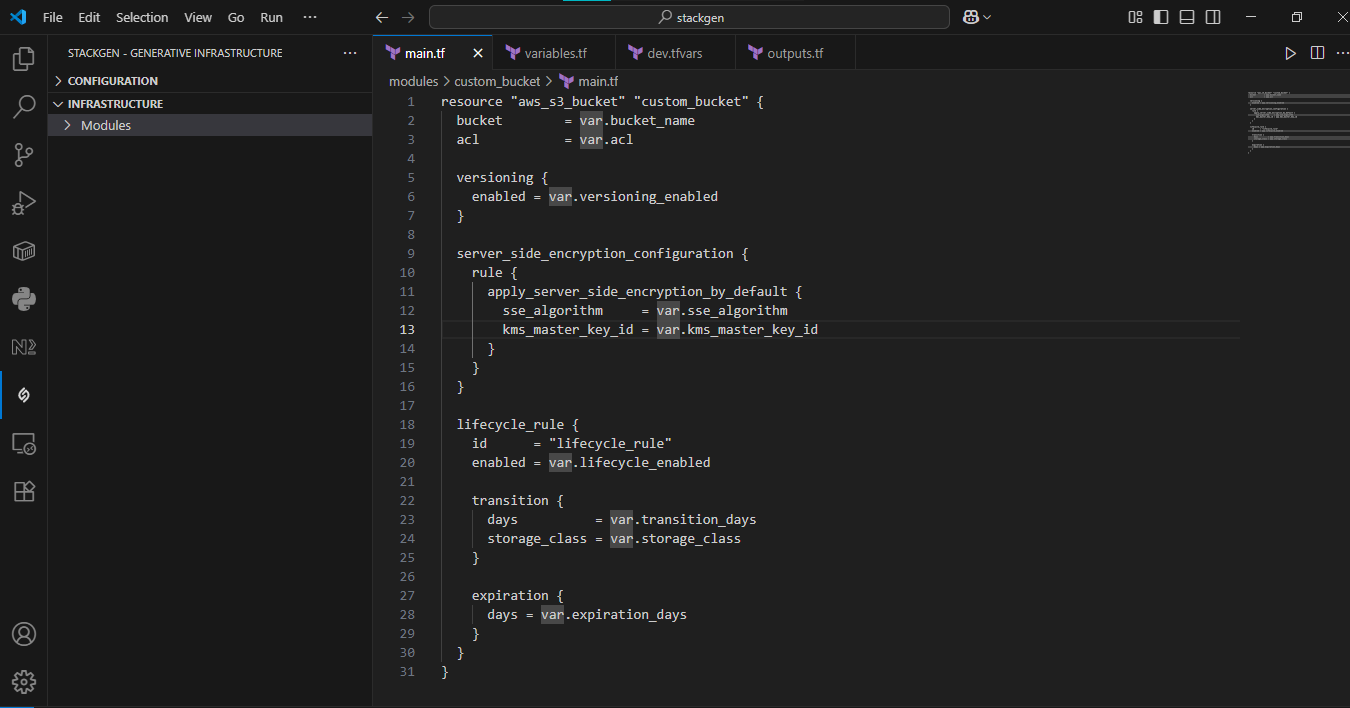

Meet the StackGen AI‑Based Module Editor

The StackGen AI‑Based Module Editor reimagines module authoring with an AI‑augmented experience embedded directly in your IDE (VS Code, Cursor, and compatible forks). It's designed to flatten the learning curve without sacrificing control.

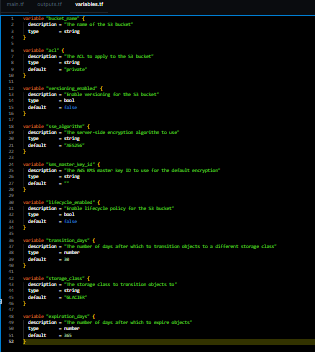

- In‑editor scaffolding: Generate deterministic, reusable module templates from your intent.

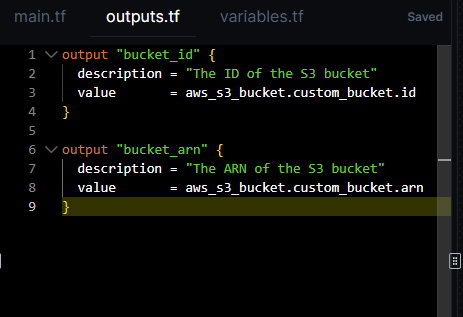

- Context‑aware suggestions: Infer and structure key variables, inputs, and outputs automatically.

- Real‑time validation: Enforce compliance (naming, tagging, security) as you author.

- Stay in your flow: The StackGen extension surfaces everything inline.

For example, when you describe "a secure S3 bucket with logging," StackGen builds a clean, compliant module with the right inputs/outputs and checks baked in.
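To make "checks baked in" concrete, here is a minimal sketch of the kind of naming, tagging, and logging checks an authoring tool could run against a module's inputs. It is not StackGen's implementation or API: the rule set (`NAME_PATTERN`, `REQUIRED_TAGS`) and the function `validate_bucket_inputs` are hypothetical names chosen for illustration, and real policies would come from your own organization.

```python
import re

# Hypothetical organization rules, not StackGen's actual policy set.
NAME_PATTERN = re.compile(r"^[a-z][a-z0-9-]{2,62}$")
REQUIRED_TAGS = {"owner", "environment"}


def validate_bucket_inputs(name: str, tags: dict[str, str], logging_enabled: bool) -> list[str]:
    """Return human-readable violations; an empty list means the inputs comply."""
    problems = []
    if not NAME_PATTERN.fullmatch(name):
        problems.append(
            f"name {name!r} must start with a letter and use only "
            "lowercase letters, digits, or hyphens (3-63 chars)"
        )
    # Sort so the message is stable from run to run.
    missing = sorted(REQUIRED_TAGS - set(tags))
    if missing:
        problems.append("missing required tags: " + ", ".join(missing))
    if not logging_enabled:
        problems.append("access logging must be enabled")
    return problems
```

An editor extension could show each returned string as an inline diagnostic, and a CI job could run the same function and fail the build if the list is not empty.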

Key Capabilities

- AI-Augmented IaC Authoring: Integrated directly into VS Code (and forks like Cursor), StackGen's AI-Based Module Editor provides deterministic, template-driven AI assistance that scaffolds, validates, and enforces compliance on Terraform/OpenTofu modules in real time within the IDE.

- Determinism and Reproducibility: Unlike general-purpose GenAI tools such as ControlMonkey, StackGen guarantees byte-for-byte identical module output for the same input, ensuring reproducibility, auditability, and compliance for production-grade infrastructure.

- IDE-First Productivity: Embedding StackGen in VS Code eliminates the need to switch between tools, enabling inline policy enforcement, linting, and quick-fix diagnostics. This shift-left approach accelerates module delivery and reduces onboarding time for DevOps teams.

- Enterprise-Grade Governance: The extension includes built-in OPA/Rego-style policy enforcement, generation hash validation, and metadata tagging, simplifying code review and CI/CD integration while maintaining strict governance and security controls.

- Migration and Adoption Path: StackGen offers a phased migration plan from manual IaC to AI-assisted module generation, so teams can adopt deterministic AI workflows incrementally, integrate validation pipelines, and deliver IaC that is faster, more consistent, and compliant across the enterprise.
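The determinism and generation hash ideas in the list above can be sketched in a few lines. This is an illustrative assumption about how such a scheme might work, not StackGen's actual algorithm: `MODULE_TEMPLATE`, `render_module`, and `generation_hash` are hypothetical names, and the template is deliberately tiny. The sketch renders a module from a fixed template and hashes the canonicalized inputs together with the output, so the same inputs always give the same text and the same hash.

```python
import hashlib
import json
from string import Template

# Hypothetical, deliberately small module template for illustration only.
MODULE_TEMPLATE = Template(
    'resource "aws_s3_bucket" "this" {\n'
    '  bucket = "$bucket_name"\n'
    '}\n'
    '\n'
    'resource "aws_s3_bucket_logging" "this" {\n'
    '  bucket        = aws_s3_bucket.this.id\n'
    '  target_bucket = "$log_bucket"\n'
    '  target_prefix = "logs/"\n'
    '}\n'
)


def render_module(inputs: dict[str, str]) -> str:
    """Render the template; identical inputs always yield identical text."""
    return MODULE_TEMPLATE.substitute(inputs)


def generation_hash(inputs: dict[str, str], rendered: str) -> str:
    """Hash canonicalized inputs plus output so later edits can be detected."""
    # sort_keys makes the hash independent of the order inputs were supplied in.
    canonical = json.dumps(inputs, sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256()
    digest.update(canonical.encode("utf-8"))
    digest.update(b"\n")
    digest.update(rendered.encode("utf-8"))
    return digest.hexdigest()
```

A reviewer or CI step could recompute the hash from the committed inputs and module text and compare it with a recorded value. A mismatch would mean the module was edited by hand after generation, or that the inputs changed.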

Determinism over "Vibe Coding"

Many generative‑AI tools produce output that's "close enough." StackGen emphasizes the opposite:

- Repeatable: Outputs are deterministic.

- Auditable: Changes are traceable end‑to‑end.

- Discipline‑first: You stay in control; AI assists engineering rigor rather than replacing it.

"This isn't vibe coding where AI churns boilerplate and you hope for the best. It's reproducible, reviewable, and production‑ready."

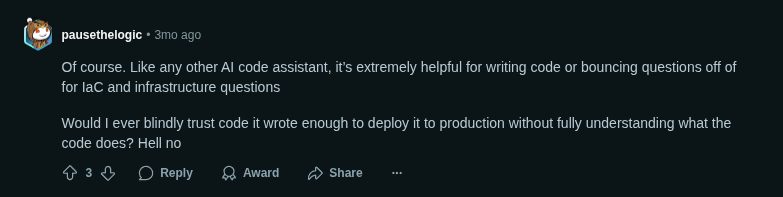

What Practitioners Are Saying

As one Redditor in r/devops put it:

Adoption Is Rising—But Trust Still Matters

According to Stack Overflow's 2025 survey, 84% of developers use or plan to use AI tools. Yet, as IT Pro notes, 46% still don't trust AI accuracy. This gap underlines the need for tools like StackGen that combine AI assistance with predictable outcomes and human oversight.

Why It Matters

- Faster onboarding with opinionated scaffolding

- Fewer production surprises via real‑time validation

- Standardized modules that teams can reuse with confidence

- Auditability that satisfies security and compliance stakeholders

---

If you're authoring Terraform/OpenTofu modules and want reproducibility without losing speed, try the StackGen extension and keep building inside your IDE.