Summary

- Multi-cloud adoption is mainstream in 2025: more than 87% of organizations run workloads across multiple providers to avoid vendor lock-in, optimize costs, and access specialized services.

- This shift introduces operational complexity, as teams must maintain visibility across environments, enforce governance, control spending, and ensure workload portability without relying on ad hoc tooling.

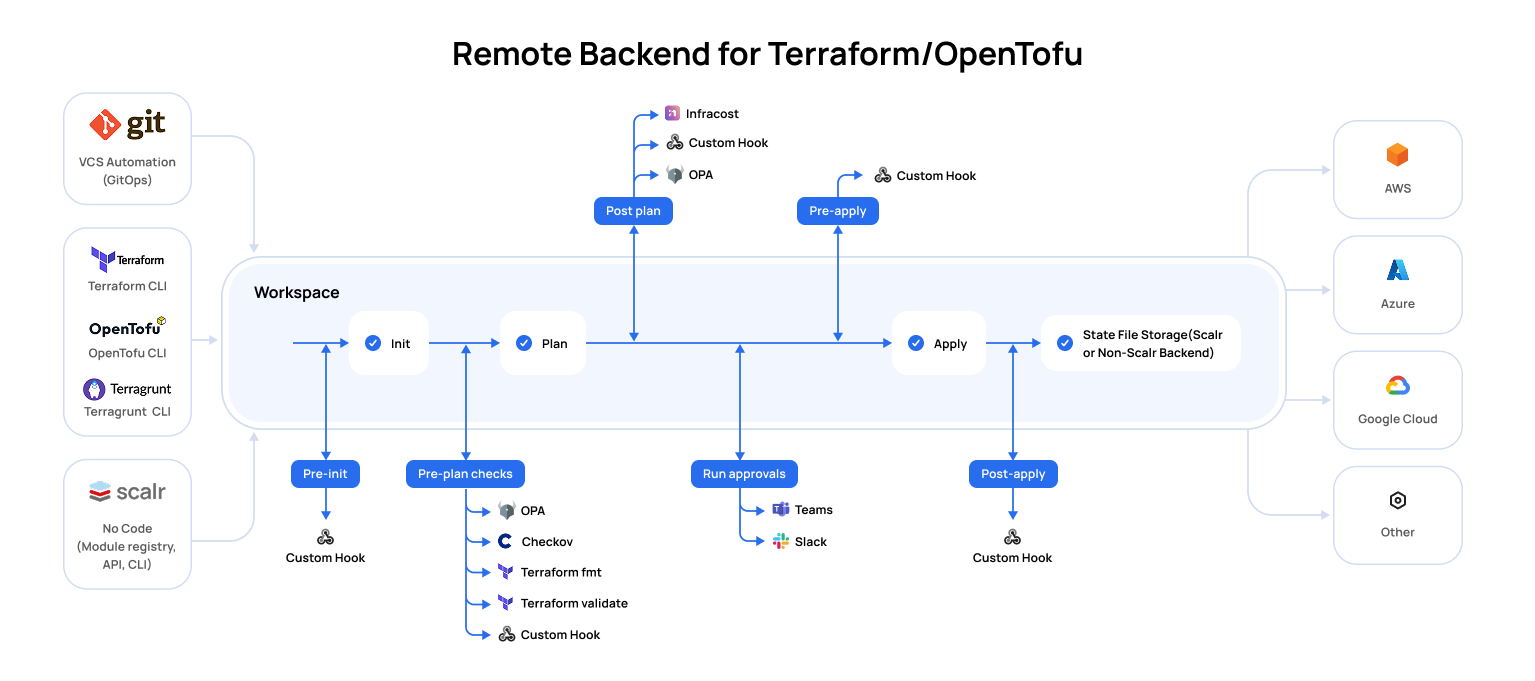

- Multi-cloud management platforms address these challenges by offering a unified interface for provisioning, governance, monitoring, and cost visibility, enabling consistency across AWS, Azure, and Google Cloud.

- Key evaluation criteria for selecting a platform include governance depth, cost management capabilities, alignment with developer workflows, observability features, and integration maturity.

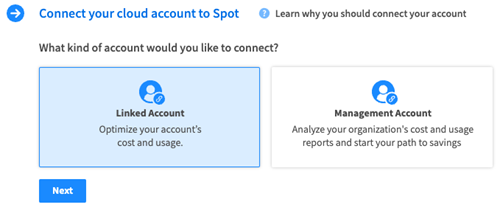

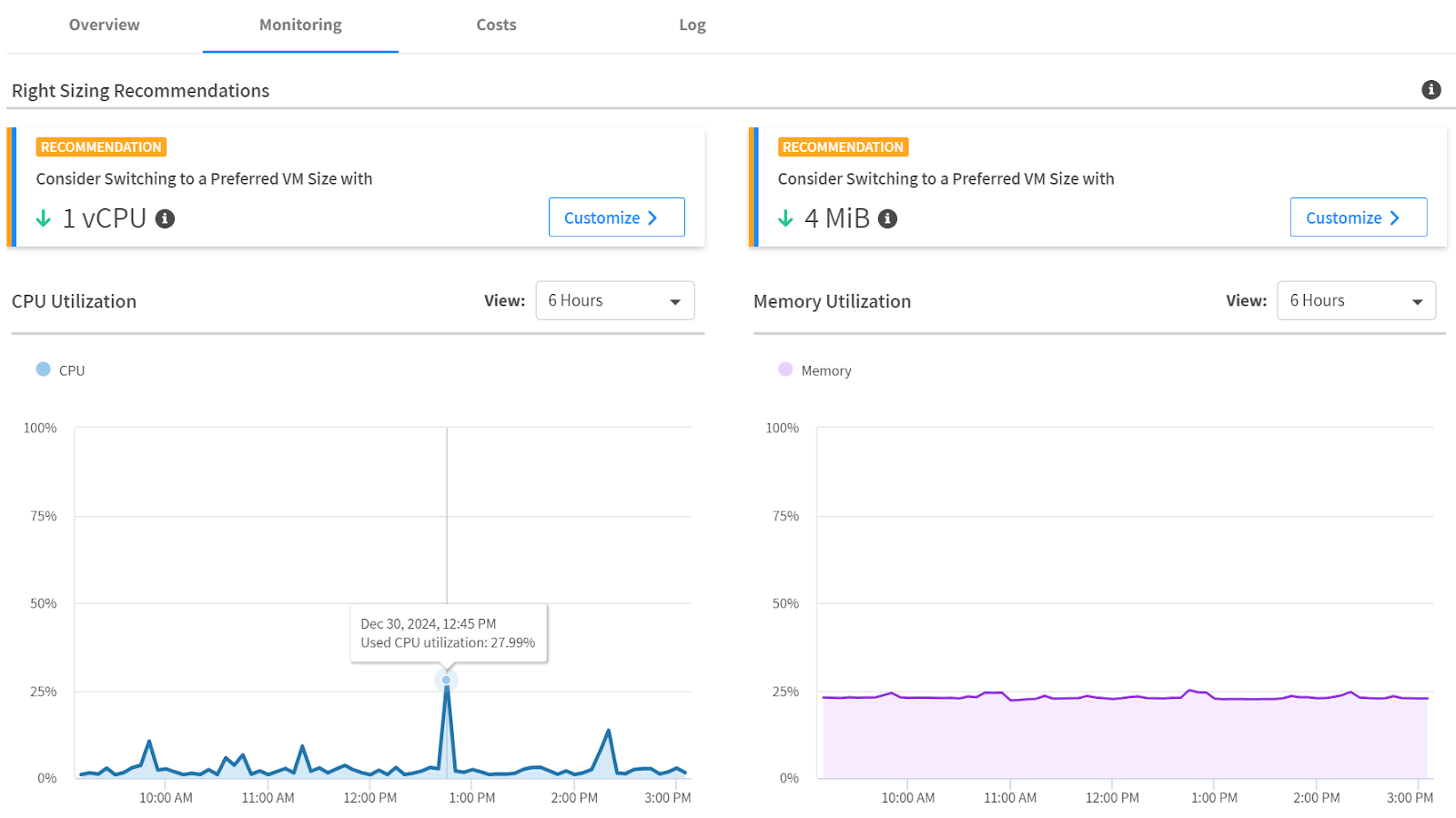

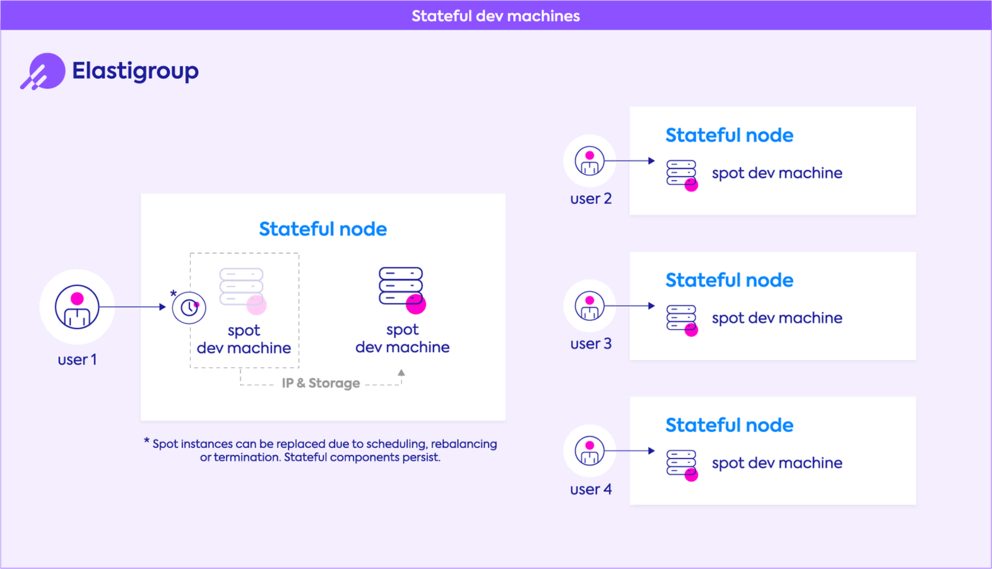

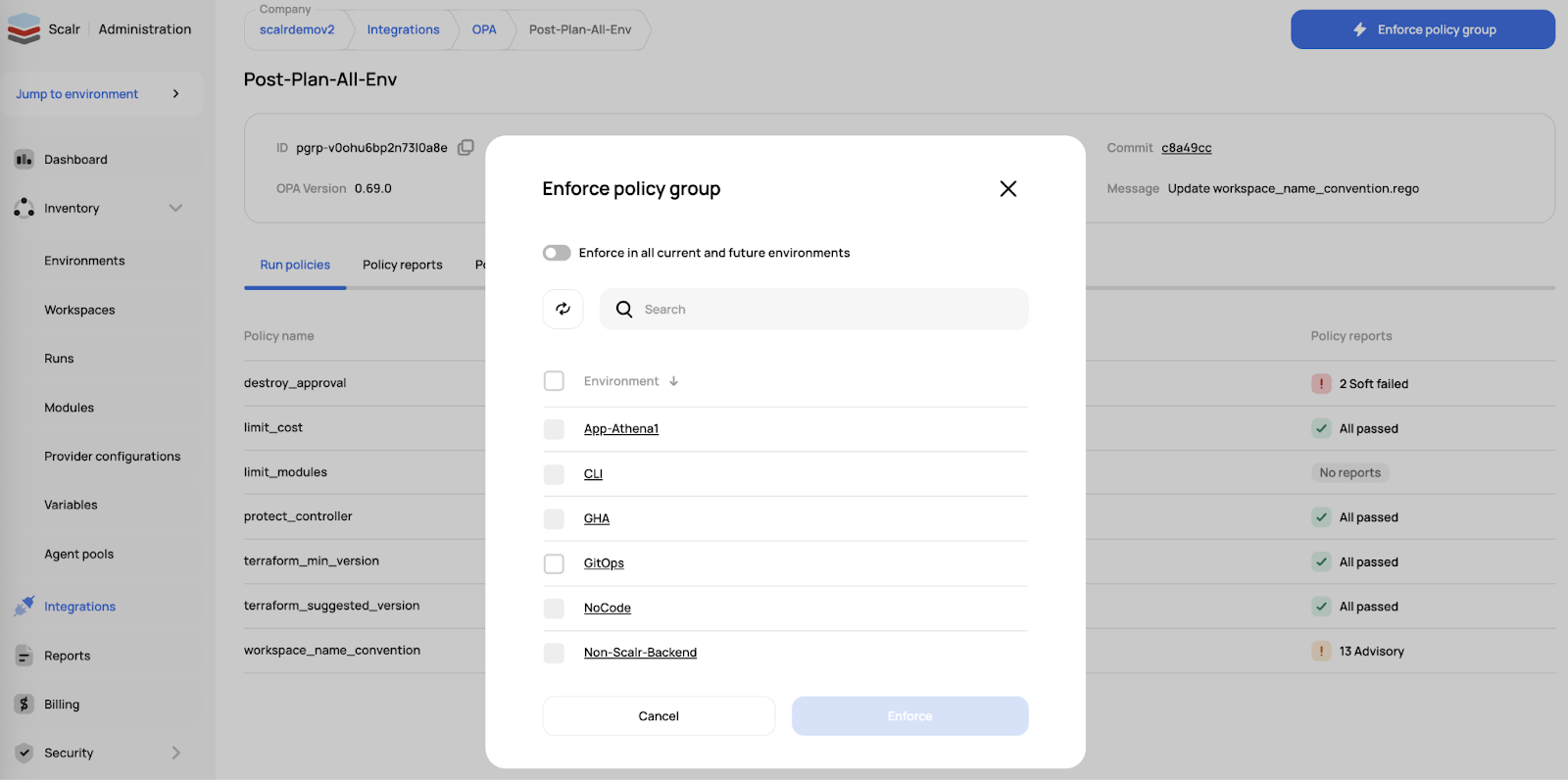

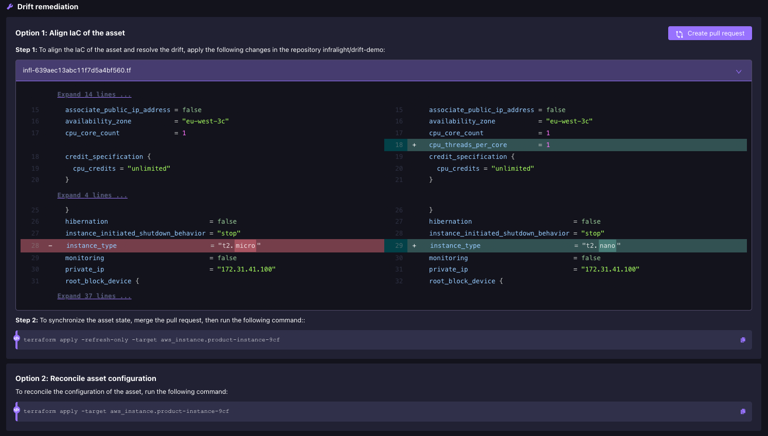

- Four leading platforms stand out: StackGen (balanced and developer-first), Firefly (compliance and codification), Spot by NetApp (cost optimization), and Scalr (IaC governance), each excelling in different areas.

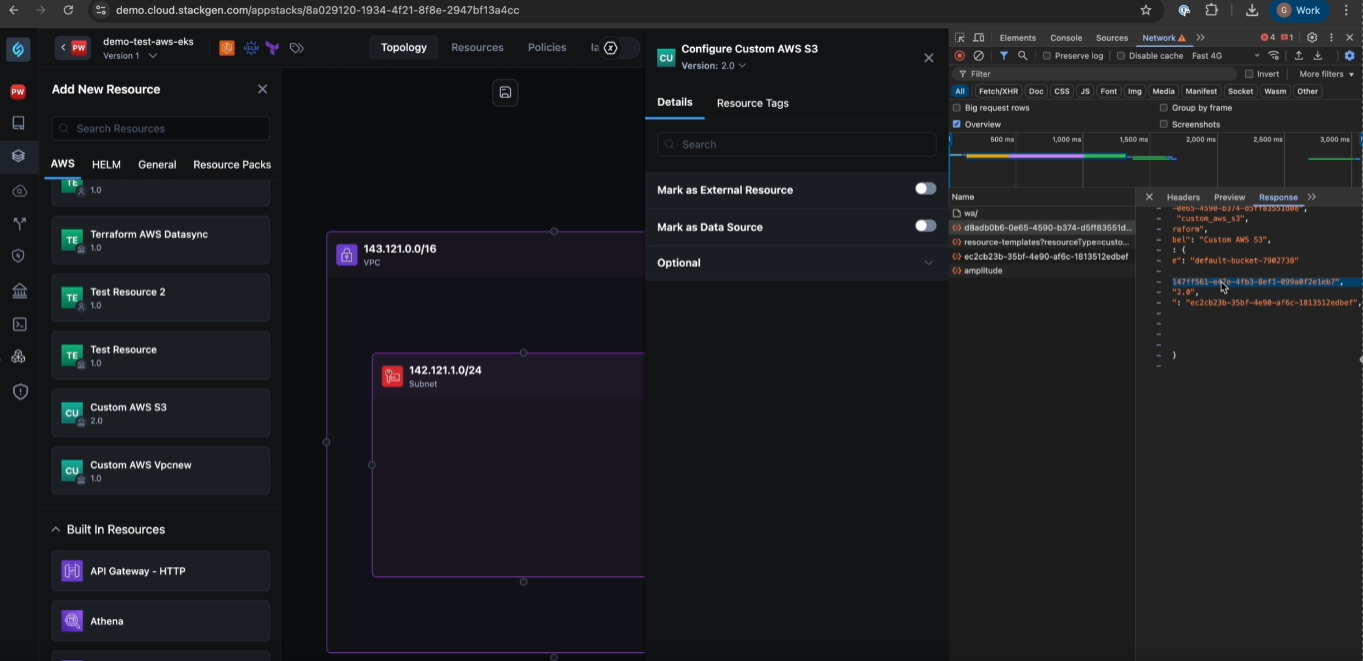

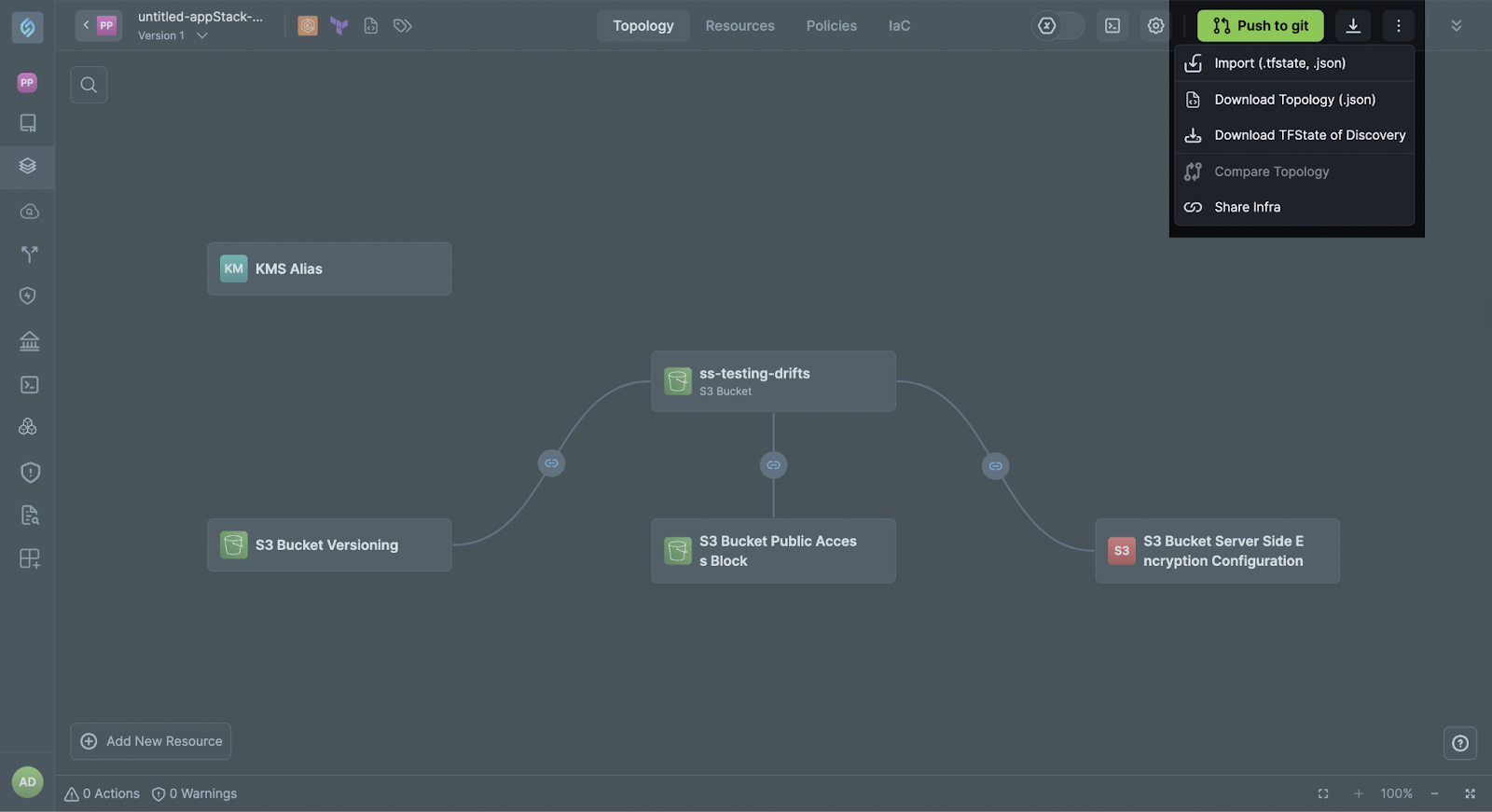

- StackGen is gaining traction because it balances governance with developer autonomy, pairing policy-driven oversight with integration into CI/CD workflows that does not introduce bottlenecks.

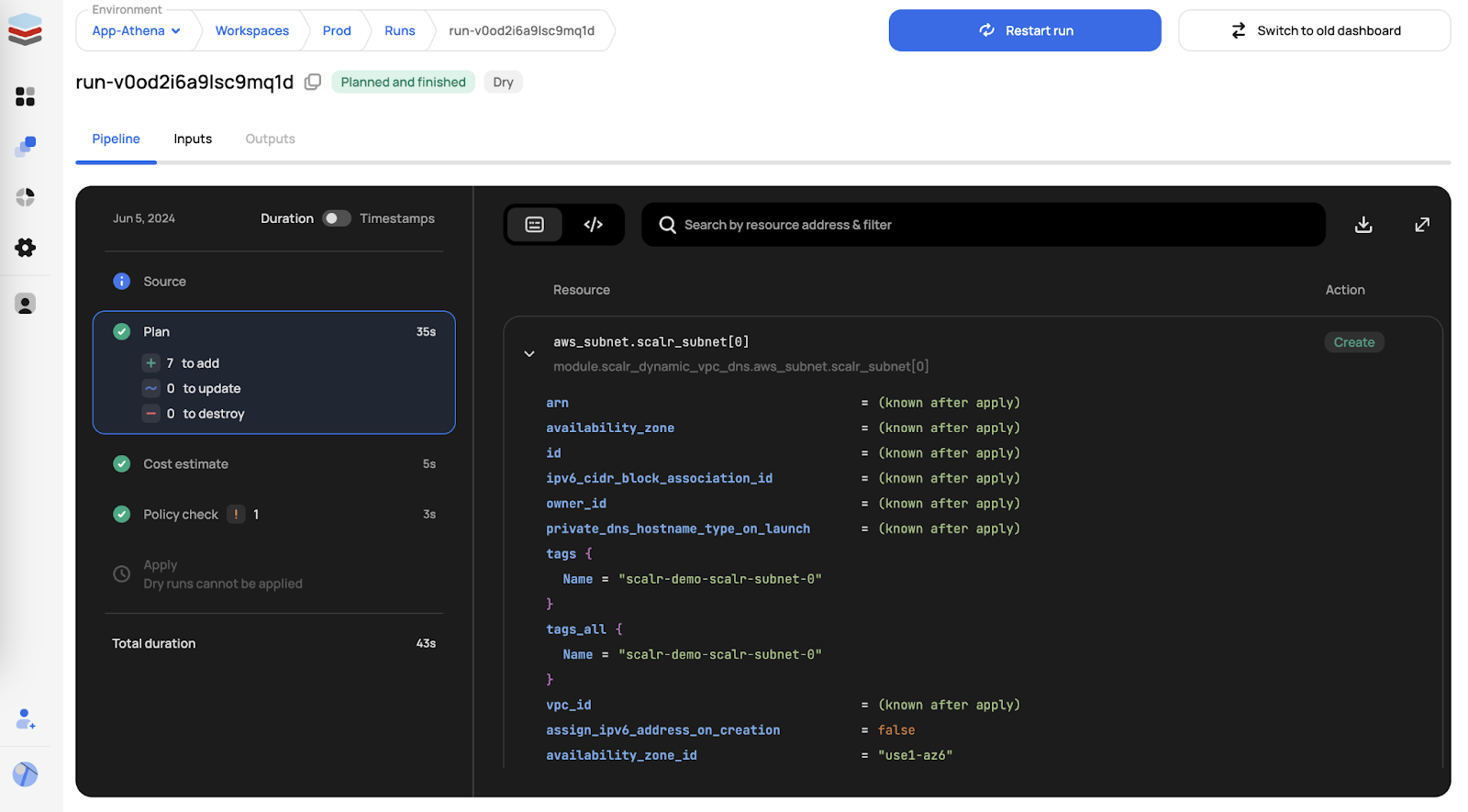

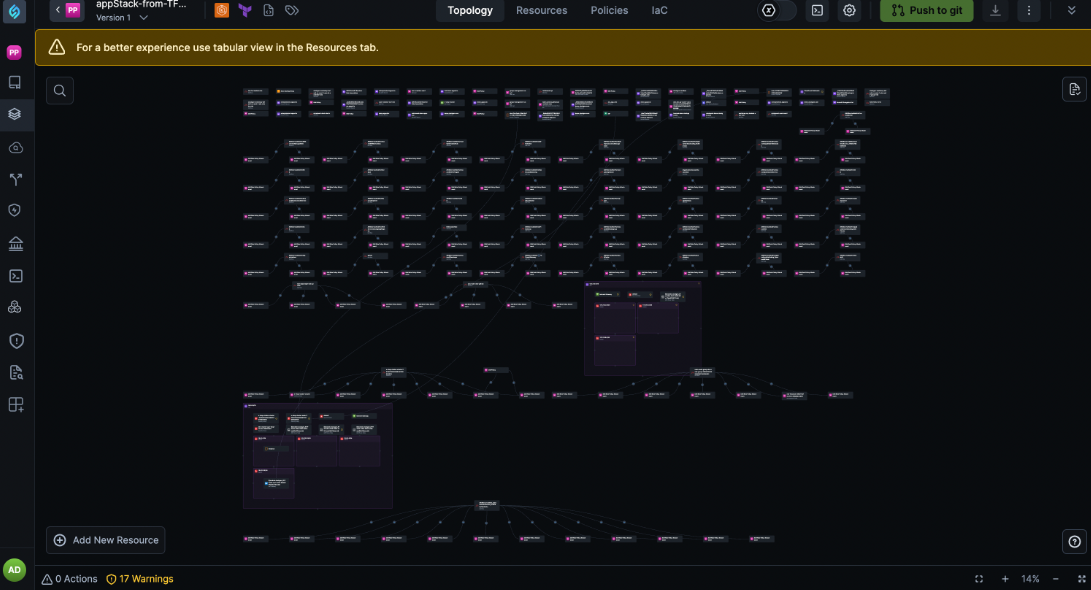

Overview