Why AI-Generated Infrastructure Without Governance Is a Risk

There’s a tempting argument making the rounds: “If AI can write infrastructure code as well as humans, why not just let developers use Claude Code or Cursor to generate their Terraform? Why do we need platform teams at all?”

It’s an appealing vision. Developers describe what they need in natural language. AI generates perfectly valid Terraform. Infrastructure appears instantly. No tickets, no waiting, no platform team bottleneck. Pure velocity.

But this approach is the infrastructure equivalent of letting every developer pull random packages from npm without any governance. It might look productive in week one. By month three, you’ll be drowning in chaos.

The question isn’t whether AI can generate infrastructure code—it absolutely can. The question is whether ungoverned AI-generated infrastructure creates more value than it destroys. For most enterprises, the answer is no.

The Illusion of Control

Let’s start with the most seductive part: it works. Brilliantly. At first.

A developer needs a database. They open Claude Code, describe their requirements, and within minutes have working Terraform that provisions an RDS instance. It deploys. It works.

Within weeks, you have dozens of AI-generated infrastructure components. Your velocity metrics look incredible. Your developers are ecstatic.

Then reality hits.

That RDS instance? It’s in a public subnet with a security group allowing inbound traffic from anywhere. The AI generated syntactically correct code, but didn’t know your organization’s network isolation requirements.

The S3 bucket? No versioning, no lifecycle policies, and costs spiraling because the AI chose the wrong storage class.

The Lambda function? Outdated runtime, no observability, not integrated with centralized logging.

The code works. But “works” and “works correctly in an enterprise context” are very different things.
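Most of these gaps can be detected mechanically before anything is applied. The sketch below is a minimal example. It assumes your pipeline produces a JSON plan with `terraform plan -out=tfplan` followed by `terraform show -json tfplan > plan.json`, and it shows how a platform team could reject plans like the ones described above. The rules and the `DEPRECATED_RUNTIMES` set are illustrative assumptions, not a complete or official policy. S3 versioning and lifecycle checks are left out because recent AWS provider versions model those as separate resources.

```python
import json
from typing import Any

# Illustrative only: consult AWS's current Lambda runtime deprecation schedule.
DEPRECATED_RUNTIMES = {"python3.7", "nodejs14.x", "go1.x"}


def check_plan(plan: dict[str, Any]) -> list[str]:
    """Return human-readable policy violations found in a Terraform JSON plan."""
    violations: list[str] = []
    for rc in plan.get("resource_changes", []):
        # "after" is the planned state; it is null for resources being destroyed.
        after = rc.get("change", {}).get("after") or {}
        addr = rc.get("address", "<unknown>")
        rtype = rc.get("type")

        if rtype == "aws_db_instance" and after.get("publicly_accessible"):
            violations.append(f"{addr}: RDS instance is publicly accessible")

        if rtype == "aws_security_group":
            # Inline rules only; standalone rule resources would need their own check.
            for rule in after.get("ingress") or []:
                open_v4 = "0.0.0.0/0" in (rule.get("cidr_blocks") or [])
                open_v6 = "::/0" in (rule.get("ipv6_cidr_blocks") or [])
                if open_v4 or open_v6:
                    violations.append(f"{addr}: ingress open to the internet")

        if rtype == "aws_lambda_function" and after.get("runtime") in DEPRECATED_RUNTIMES:
            violations.append(f"{addr}: deprecated Lambda runtime {after['runtime']}")
    return violations


def check_plan_file(path: str) -> list[str]:
    """Load the JSON written by `terraform show -json` and check it."""
    with open(path, encoding="utf-8") as f:
        return check_plan(json.load(f))
```

A CI job would call `check_plan_file("plan.json")` and fail if the returned list is non-empty. The point is not these three rules in particular but where they run: at design time, before the AI-generated code reaches production.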

The System of Record Problem

Here’s the critical question: who is managing the system of record?

When developers use AI to generate infrastructure ad-hoc, you end up with dozens of independent Terraform state files scattered across repositories. Each represents an isolated piece with its own lifecycle and failure modes.

Need to audit infrastructure for security compliance? You can’t. There’s no central inventory.

Need to implement a company-wide policy change—encrypting all data with a specific KMS key? You must hunt down every AI-generated Terraform file.

A developer who generated infrastructure leaves the company? Where their Terraform state lives, and why the infrastructure looks the way it does, leaves with them.

Two developers’ AI-generated infrastructure needs to interact? They’ve made incompatible choices about networking or resource sharing.

The AI generated perfectly valid code. But valid code in isolation doesn’t create a coherent system.

This is the same lesson the industry learned with open source. Developers can pull any package from public repositories, but enterprises that survived “dependency hell” learned they needed governance: dependency management, security scanning, version pinning.

AI-generated infrastructure without governance is dependency hell for the cloud era.
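A central inventory does not need to be sophisticated to answer the audit and KMS questions raised earlier in this section. The sketch below assumes you can collect each team’s raw state JSON (for example with `terraform state pull`, which prints state in format version 4 on current Terraform releases). It flattens the states into one list and then flags resources not encrypted with an approved key. `APPROVED_KMS_KEY`, the source labels, and the resource types checked are hypothetical placeholders.

```python
import json
from dataclasses import dataclass
from typing import Any

# Hypothetical placeholder: the single KMS key your security policy approves.
APPROVED_KMS_KEY = "arn:aws:kms:us-east-1:111122223333:key/example"

# Resource types whose Terraform state exposes a `kms_key_id` attribute.
KMS_CHECKED_TYPES = {"aws_db_instance", "aws_ebs_volume"}


@dataclass
class InventoryItem:
    source: str                 # where the state came from (repo, backend key, ...)
    address: str                # e.g. module.db.aws_db_instance.main
    resource_type: str
    attributes: dict[str, Any]


def build_inventory(states: dict[str, dict[str, Any]]) -> list[InventoryItem]:
    """Flatten several Terraform state documents (format version 4) into one list."""
    items: list[InventoryItem] = []
    for source, state in states.items():
        for res in state.get("resources", []):
            if res.get("mode") != "managed":
                continue  # data sources are reads, not owned infrastructure
            base = f"{res['type']}.{res['name']}"
            if "module" in res:
                base = f"{res['module']}.{base}"
            for inst in res.get("instances", []):
                addr = base
                if "index_key" in inst:
                    # count -> [0], for_each -> ["key"], matching Terraform's address syntax
                    addr += f"[{json.dumps(inst['index_key'])}]"
                items.append(InventoryItem(source, addr, res["type"], inst.get("attributes", {})))
    return items


def kms_violations(items: list[InventoryItem]) -> list[str]:
    """List resources that are not encrypted with the approved KMS key."""
    return [
        f"{i.source}: {i.address}"
        for i in items
        if i.resource_type in KMS_CHECKED_TYPES
        and i.attributes.get("kms_key_id") != APPROVED_KMS_KEY
    ]
```

In practice the hard part is not this code. It is knowing that every state file exists, and that is exactly what ad-hoc generation makes impossible.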

When Things Go Wrong

The real test isn’t how well infrastructure works when things go right—it’s how well it fails when things go wrong.

A developer uses AI to generate Terraform for a three-tier web application. It deploys. It works. Six months later: production incident. Database connections timing out. The developer is on vacation.

Who can fix this? The on-call engineer doesn’t know this infrastructure exists, because it was never added to a central inventory. They can’t find the Terraform state. When they finally track down the code, they discover it is a 1,000-line monolith without clear module boundaries.

The database is undersized, but they can’t resize it without downtime because the AI didn’t configure blue-green deployment.

The outage extends from hours to days. Not because the fix is complicated, but because nobody can safely change infrastructure generated in isolation without governance.

This isn’t hypothetical. This is the default outcome of ungoverned AI-generated infrastructure.

The Hidden Cost of Apparent Productivity

Organizations adopting “just let developers use AI” often celebrate dramatic productivity gains. But they’re measuring input (infrastructure created) rather than output (value delivered safely).

The real costs appear later:

Security incidents from infrastructure that didn’t follow compliance requirements

Cost overruns from non-optimized defaults that are expensive at scale

Operational chaos from infrastructure only maintainable by its creator

Technical debt from dozens of different patterns solving the same problems

Team friction when AI-generated infrastructure can’t interoperate

You haven’t eliminated the platform team—you’ve made every developer an amateur platform engineer. AI makes them productive amateurs, but amateur is still amateur.

The Collaboration Question

Infrastructure isn’t solo work; it’s a team sport. AI-generated infrastructure, by default, is profoundly solo.

When platform teams build modules, they encode organizational knowledge: “This is how we do databases. This is how we do networking.” Future developers inherit these patterns instead of rediscovering them.

When developers use AI ad-hoc, they make isolated decisions optimized for immediate needs without visibility into broader impact.

What happens when the payments team’s AI-generated infrastructure must connect to the user management team’s? Incompatible networking models. Different authentication patterns. Conflicting security rules.

What happens when you need to implement a cross-cutting concern like distributed tracing? With governed infrastructure, you update the shared modules. With ungoverned AI-generated infrastructure, you find and modify hundreds of independent configurations.

AI is brilliant at generating code. AI is terrible at generating alignment.

The False Choice

The framing shouldn’t be “AI-generated infrastructure vs. traditional infrastructure.” That’s a false choice.

The real question: how do we harness AI’s code generation while maintaining governance, standards, and collaboration?

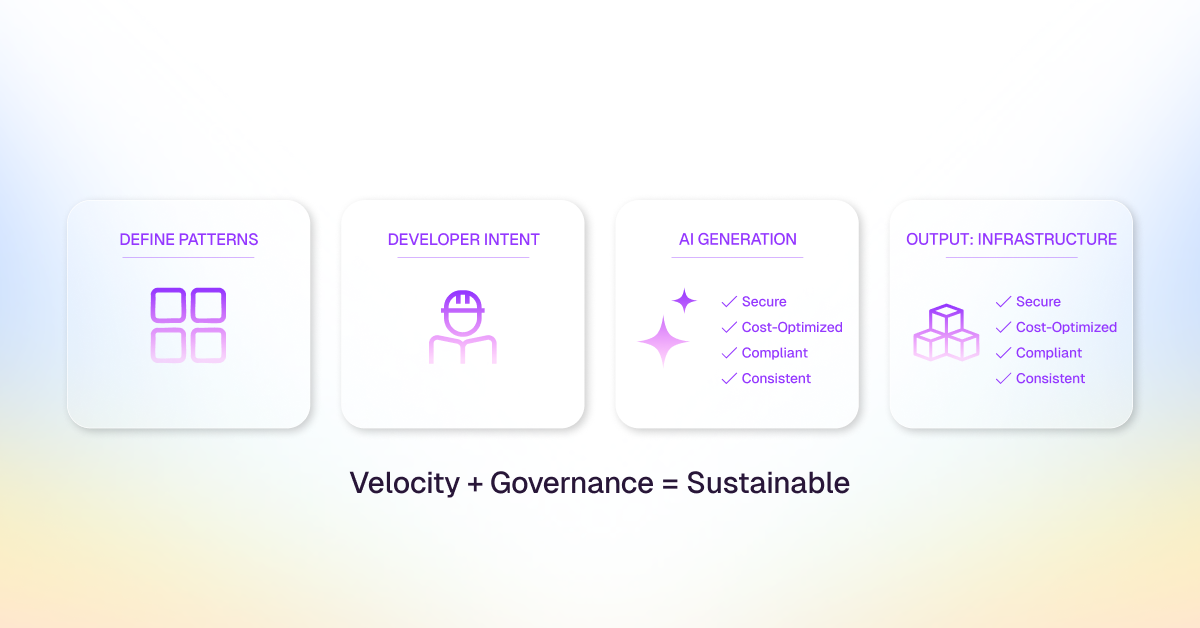

This is where governed AI-generated infrastructure becomes critical. Platform teams define patterns that encode security policies, cost optimizations, and networking standards. Developers describe their intent. AI then generates infrastructure that matches that intent while conforming to the organization’s patterns.

The code is still AI-generated. The velocity is still there. But instead of chaos, you get consistency. Instead of isolation, alignment. Instead of amateur platform engineering, guided infrastructure creation.
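One way to picture “infrastructure matching intent while conforming to organizational patterns” is a pattern that splits its settings into two groups: values the platform enforces and defaults the developer can tune. The sketch below is hypothetical. The `Pattern` class, the `postgres-db` pattern, and every setting name are invented for illustration and are not StackGen’s or any tool’s API. Whatever the developer or the AI proposes is merged over the defaults. Any attempt to override an enforced value, or to set an unsupported key, is rejected before code is generated.

```python
from dataclasses import dataclass, field
from typing import Any


class PolicyViolation(Exception):
    """Raised when a request conflicts with the platform-defined pattern."""


@dataclass(frozen=True)
class Pattern:
    name: str
    enforced: dict[str, Any]                                # platform-owned, not negotiable
    defaults: dict[str, Any] = field(default_factory=dict)  # sensible starting values
    allowed: frozenset[str] = frozenset()                   # keys a developer may set

    def resolve(self, intent: dict[str, Any]) -> dict[str, Any]:
        """Merge a developer/AI request into a configuration that conforms to the pattern."""
        for key, value in intent.items():
            if key in self.enforced and value != self.enforced[key]:
                raise PolicyViolation(f"{self.name}: '{key}' is fixed to {self.enforced[key]!r}")
            if key not in self.enforced and key not in self.allowed:
                raise PolicyViolation(f"{self.name}: '{key}' is not a supported setting")
        # Later entries win: defaults < intent < enforced.
        return {**self.defaults, **intent, **self.enforced}


# Hypothetical pattern a platform team might publish for PostgreSQL databases.
POSTGRES = Pattern(
    name="postgres-db",
    enforced={"publicly_accessible": False, "storage_encrypted": True,
              "subnet_group": "private-data"},
    defaults={"instance_class": "db.t3.medium", "multi_az": True},
    allowed=frozenset({"instance_class", "multi_az", "allocated_storage"}),
)
```

For example, `POSTGRES.resolve({"instance_class": "db.r6g.large"})` keeps the requested size but still forces the private subnet group and encryption. The allow-list is the important design choice: it keeps AI output inside the space the platform team has already reviewed. It also turns a cross-cutting change into one edit to `enforced` instead of edits across hundreds of repositories.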

The Open Source Parallel

The history of open source provides a perfect parallel.

Early 2000s: developers wanted open source libraries. Security teams wanted to ban them. The compromise wasn’t “let developers use any open source” or “ban open source.” It was governed open source: curated registries, security scanning, approval workflows.

Organizations that took the “just let developers use open source” approach were left scrambling when Heartbleed, Shellshock, and Log4Shell hit, because they had no inventory telling them where the vulnerable components were running. Those that banned open source lost competitive velocity.

The winners built governance: Artifactory for curated dependencies, Snyk for security scanning, and processes for vetting packages.

We’re at the same inflection point with AI-generated infrastructure. The winners will build governance: curated module catalogs, policy enforcement at design time, patterns guiding AI toward infrastructure that’s not just working but working correctly.

The Path Forward

The goal isn’t to prevent developers from using AI for infrastructure. The goal is to channel that AI into creating infrastructure that’s secure, cost-effective, maintainable, and aligned with organizational standards.

This requires platform teams to evolve. Not to become gatekeepers who slow everything down, but to become architects who build the guardrails within which AI can operate safely.

It requires recognizing that “AI can generate code” doesn’t mean “AI should generate ungoverned infrastructure.” The former is a capability. The latter is a liability.

It requires understanding that velocity without governance isn’t progress—it’s just accumulating technical debt faster.

The future of infrastructure isn’t manual provisioning by platform teams. But it’s also not ungoverned AI generation by developers. It’s governed, AI-accelerated infrastructure creation where platform teams define patterns and AI helps developers implement them.

That’s not a compromise. That’s the only sustainable path forward.

Ready to Implement Governed AI Infrastructure?

StackGen’s platform enables exactly this balance—AI-accelerated infrastructure provisioning within guardrails defined by your platform team. Developers get the velocity they demand. Platform teams get the governance they require. Learn how StackGen works or request a demo to see governed AI infrastructure in action.

About StackGen:

StackGen is the pioneer in Autonomous Infrastructure Platform (AIP) technology, helping enterprises transition from manual Infrastructure-as-Code (IaC) management to fully autonomous operations. Founded by infrastructure automation experts and headquartered in the San Francisco Bay Area, StackGen serves leading companies across technology, financial services, manufacturing, and entertainment industries.